Project Introduction

Based on YOLOv12, this detection and analysis system for abnormal and cheating behavior in exams performs real-time identification, annotation, statistical analysis, and record retention for multiple types of abnormal or cheating behavior in examination room video.

System Overview

As education informatization continues to develop, traditional manual proctoring has exposed problems such as high labor cost, low monitoring efficiency, and strong subjectivity. To make exam proctoring more intelligent and standardized, this project designs and implements an exam abnormal behavior detection and analysis system based on the YOLOv12 deep learning algorithm.

The system uses the latest YOLOv12 object detection algorithm as its core detection engine. Compared with earlier models, it significantly improves detection accuracy and inference speed and meets the needs of real-time monitoring. The system automatically identifies and analyzes 12 typical abnormal behaviors in examination room video: answering in advance, tilting the head left or right, tilting the head backward, standing during the exam, passing suspicious items, picking up suspicious items, carrying suspicious items, putting hands under the table with the head down, entering or leaving the examination room midway, raising a hand, destroying test papers, and continuing to answer after the exam ends.

For the implementation, a graphical user interface is built on the PySide6 framework. It supports several working modes, including video file detection, real-time camera detection, and static image detection, and uses an SQLite database for persistent storage and management of detection results. The main functions are: multi-source video input and processing, real-time abnormal behavior detection and annotation, examination time management and violation detection, examination room and invigilation information configuration, statistical analysis of detection results, and automatic recording and storage of abnormal behavior detection videos.

Experimental and test results show that the system accurately identifies the various abnormal behaviors in the examination room in practical application scenarios, with high detection accuracy, a stable average frame rate above 30 FPS, and a response speed that meets real-time monitoring requirements. The system has a friendly interface, is convenient to operate, and has strong practical and promotion value. It can provide effective technical support for exam invigilation, reduce the workload of invigilators, and improve the fairness and standardization of exams.

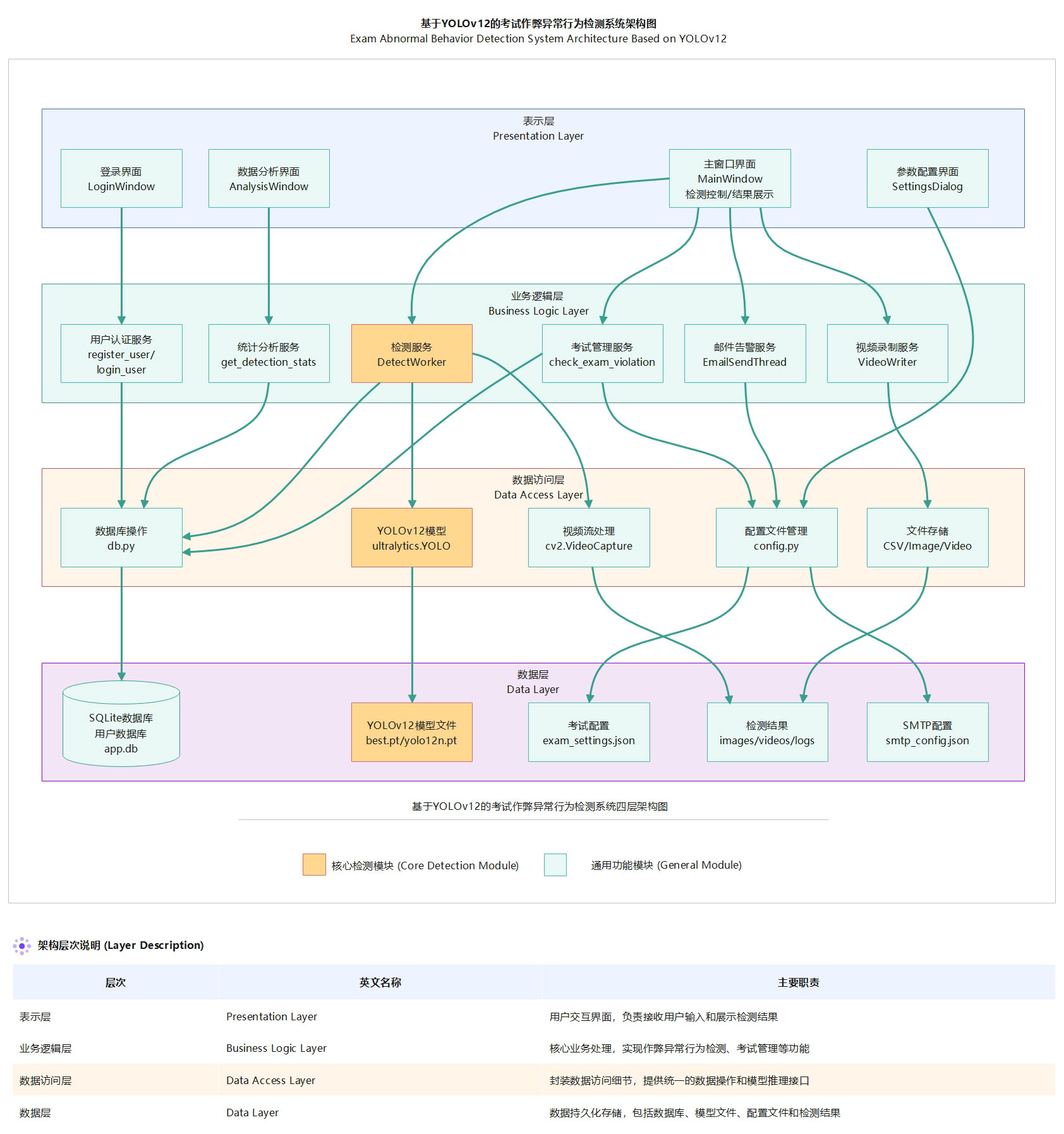

System architecture

The system adopts a classic four-layer architecture design:

Figure 1 Four-layer architecture diagram of the exam cheating and abnormal behavior detection system

Core highlights

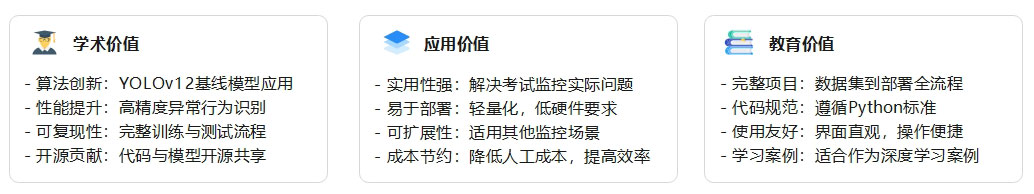

This section gives a quick overview of the system's core technical value and application highlights, so that readers can quickly understand what sets the project apart. Technologists, researchers, and decision-makers can all find the key information needed to judge whether the system meets their needs.

Algorithm characteristics

The system uses the YOLOv12n official baseline model as the core detection algorithm, which has the following characteristics:

– Attention-centric design: Area Attention offers low overhead and a large receptive field, improving the representation of complex scenes.

– Feature aggregation: R-ELAN strengthens feature fusion and gradient propagation, balancing accuracy and stability.

– Multi-scale detection: predictions at multiple scales make the model more robust to small and occluded targets.

– Lightweight deployment: YOLOv12n has ≈ 2.6M parameters and ≈ 6.5 GFLOPs, which suits real-time and edge deployment.

Performance breakthrough

After 150 full epochs of training on the exam abnormal behavior recognition dataset (3,824 training images + 1,092 validation images), the YOLOv12 baseline model achieved excellent recognition performance:

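Figure 2 Baseline model performance analysis

System features

Built on the YOLOv12 deep learning architecture, the system pairs high-accuracy recognition with a focus on practicality and ease of use, providing complete functional modules and a friendly user experience.

Technical value

The project's technical innovations have academic significance as well as broad application and educational value.

Core technology

Based on the YOLO12 real-time object detection framework, the system builds a detection model that combines lightweight feature extraction, attention enhancement, multi-scale feature fusion, and an optimized regression loss, achieving high-accuracy real-time recognition of 12 classes of abnormal exam behavior.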

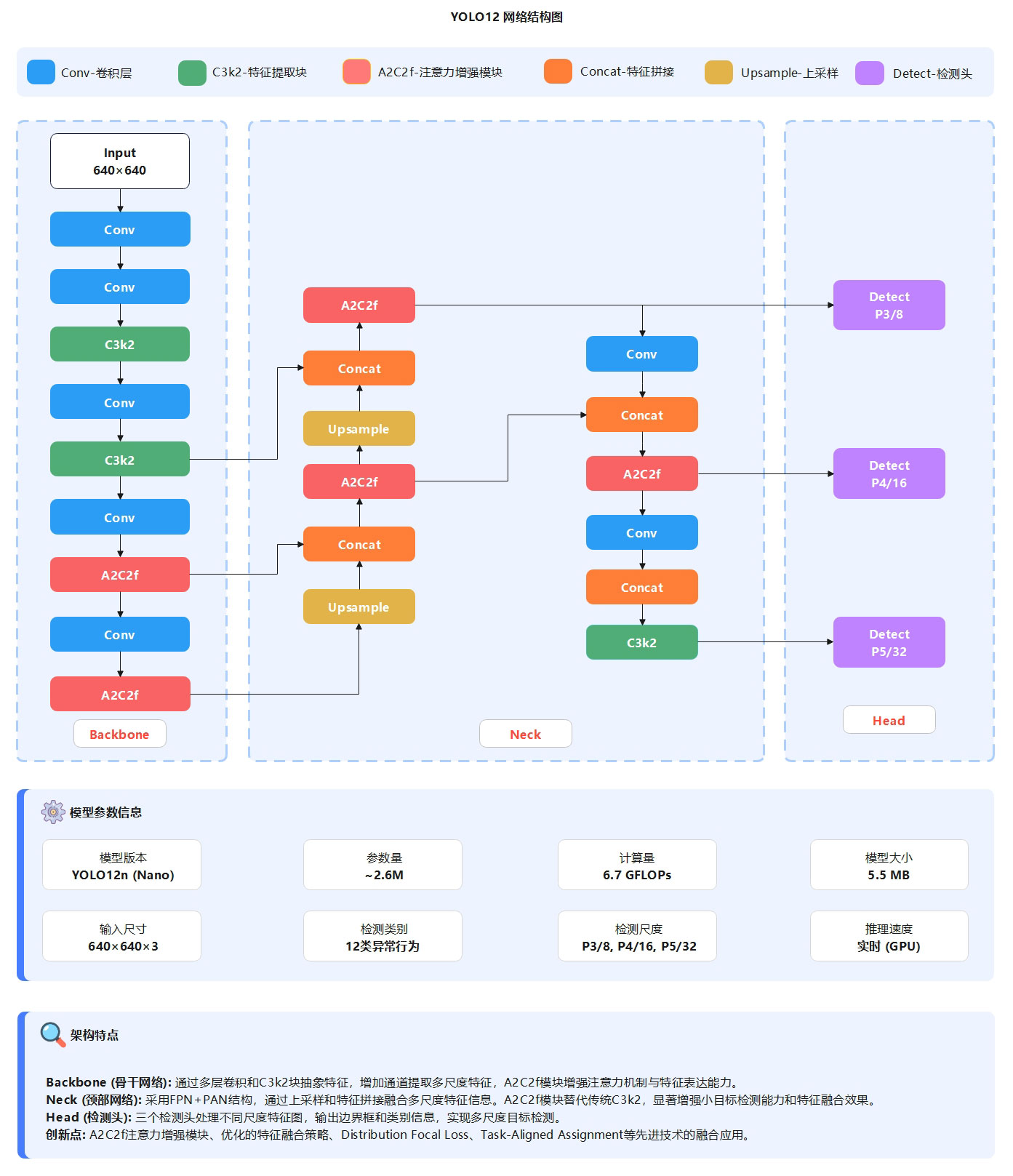

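Algorithm details

The system uses the latest YOLOv12n (Nano) model released by Ultralytics as its core detection algorithm. YOLOv12 follows the classic three-part Backbone–Neck–Head architecture (see figure). The Backbone takes a 640×640 input image, downsamples it with two initial convolution layers, and uses 2 lightweight C3k2 modules to progressively reduce feature map resolution and increase channel width (64 → 512), extracting multi-scale features from low-level texture to high-level semantics. The middle and later stages add 2 A2C2f attention-enhanced modules (channel width 512 → 1024), whose adaptive attention mechanism strengthens features in key regions such as candidates' abnormal movements and suspicious items, giving stronger feature representation and context modeling than conventional modules. The Backbone finally outputs feature maps at three scales, P3/8, P4/16, and P5/32, which correspond to small, medium, and large targets.

Figure 3 YOLO12 network architecture diagram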
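As a quick check on the three output scales, the sketch below divides the 640×640 input by each stride to get the grid size of each feature map. The input size and strides come from the description above; the function and variable names are illustrative only.

```python
# Grid sizes of the three detection scales for a square input.
INPUT_SIZE = 640                          # input resolution stated in the text
STRIDES = {"P3": 8, "P4": 16, "P5": 32}   # downsampling factor of each scale


def grid_sizes(input_size: int, strides: dict[str, int]) -> dict[str, int]:
    """Return the side length of each feature map (input size // stride)."""
    return {name: input_size // stride for name, stride in strides.items()}


sizes = grid_sizes(INPUT_SIZE, STRIDES)
# Each grid cell is one candidate prediction location in an anchor-free head.
total_cells = sum(side * side for side in sizes.values())

for name, side in sizes.items():
    print(f"{name}: {side}x{side} cells")
print(f"total prediction locations: {total_cells}")
```

The finest grid (P3) has the most cells, which is why it is the scale associated with small targets.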

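The Neck uses a bidirectional FPN+PAN feature pyramid for multi-scale feature fusion. FPN passes high-level semantic information top-down to the lower levels, improving detection of small targets (such as hand movements and small suspicious items). PAN feeds low-level detail and localization information bottom-up to the higher-level features, improving detection accuracy for large targets (such as whole-body actions like standing or leaving the seat). The Neck makes heavy use of A2C2f modules in place of conventional fusion modules, which significantly improves fusion quality and detection performance.

The Head consists of three independent decoupled detection heads, one per feature scale, which output bounding box coordinates, probabilities for the 12 abnormal behavior classes (answering in advance, tilting the head left or right, tilting the head backward, standing during the exam, passing suspicious items, picking up suspicious items, carrying suspicious items, putting hands under the table with the head down, entering or leaving the examination room midway, raising a hand, destroying test papers, and continuing to answer after the exam ends), and confidence. The system uses an anchor-free detection mechanism: bounding box regression uses DFL (Distribution Focal Loss) to improve localization accuracy, the classification branch uses Varifocal Loss to mitigate class imbalance, and Task-Aligned Assignment dynamically assigns positive and negative samples to align the classification and localization tasks.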
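To make the DFL idea more concrete: a head trained with Distribution Focal Loss predicts, for each box edge, a discrete distribution over candidate distances, and the final distance is read out as the expected value of that distribution. The following is a minimal sketch of that readout step only, not the project's code. The bin count `REG_MAX = 16` is an assumption chosen for illustration, and the function names are hypothetical.

```python
import math

REG_MAX = 16  # assumption for this sketch: number of distance bins per box edge


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_edge(logits: list[float]) -> float:
    """Turn per-bin logits into one edge distance, in units of the stride.

    The distance is the expectation of the bin index under the softmax
    distribution, so probability split between neighbouring bins yields
    a sub-bin (fractional) distance.
    """
    probs = softmax(logits)
    return sum(index * p for index, p in enumerate(probs))


# Equal probability mass on bins 3 and 4 decodes to a distance between them.
logits = [-1e9] * REG_MAX
logits[3] = logits[4] = 0.0
distance = decode_edge(logits)
print(f"decoded distance: {distance:.2f} grid units")
```

Multiplying the decoded distance by the stride of the feature map (8, 16, or 32) converts it to pixels in the input image.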

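The model has only 2.6M parameters (2,602,288), a compute cost of 6.7 GFLOPs, and a file size of about 5.5 MB. Inference reaches 50+ FPS on a GPU, so the model stays lightweight and real-time while maintaining detection accuracy. After 150 epochs of training, the model reached mAP@0.5 of 99.50%, mAP@0.5:0.95 of 99.48%, precision of 99.86%, and recall of 99.69% on the exam abnormal behavior detection dataset, which makes it suitable for real-time examination room monitoring and abnormal behavior recognition.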
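The size and speed figures above can be related with simple arithmetic. The sketch below assumes the weights are stored as 16-bit floats, which is an assumption rather than something the text states; under that assumption the raw weights account for most of the quoted file size, and 50 FPS corresponds to a per-frame time budget of 20 ms.

```python
PARAMS = 2_602_288         # parameter count quoted in the text
BYTES_PER_PARAM_FP16 = 2   # assumption: weights stored as 16-bit floats
TARGET_FPS = 50            # GPU frame rate quoted in the text


def weight_megabytes(params: int, bytes_per_param: int) -> float:
    """Raw weight storage in megabytes (1 MB = 10**6 bytes)."""
    return params * bytes_per_param / 1_000_000


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, to sustain a frame rate."""
    return 1000.0 / fps


size_mb = weight_megabytes(PARAMS, BYTES_PER_PARAM_FP16)
budget_ms = frame_budget_ms(TARGET_FPS)
print(f"weights at FP16: {size_mb:.2f} MB")
print(f"per-frame budget at {TARGET_FPS} FPS: {budget_ms:.1f} ms")
```

The budget covers everything done per frame (decoding, preprocessing, inference, drawing), not inference alone.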

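Technical advantage analysis

YOLO12n builds on YOLO11, YOLOv10, and YOLOv8 with systematic optimizations. It combines A2C2f attention-enhanced modules with lightweight C3k2 modules to improve feature representation while maintaining detection accuracy, and uses 2×2 convolutional downsampling to reduce parameter count and computational complexity. Compared with the C2PSA and SPPF modules in YOLO11, A2C2f uses an adaptive attention mechanism that strengthens global modeling and multi-scale feature fusion, and it is used widely in the Neck. The detection head is decoupled and introduces DFL and TAA to optimize regression and sample assignment, further improving performance on small targets and in complex scenes. Experimental results show that YOLO12n achieves a better balance among parameter efficiency, inference speed, and detection accuracy, reaching mAP@0.5:0.95 of 99.48% on the exam abnormal behavior detection task.

Performance

With 2.6M parameters and 6.7 GFLOPs, the YOLO12n baseline model runs inference efficiently and reaches 99.50% mAP@0.5 and 99.48% mAP@0.5:0.95 on the exam abnormal behavior detection task, with precision of 99.86% and recall of 99.69%. It supports GPU acceleration and CPU deployment, balancing light weight, high accuracy, and real-time performance.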

Model performance analysis

The model is lightweight, with only 2.6M parameters, 6.7 GFLOPs of compute, and a model file of approximately 5.5 MB, which makes it suitable for deployment on resource-constrained edge devices. While maintaining high accuracy, it has good real-time performance and runs on both CPU and GPU, providing efficient and reliable technical support for the exam abnormal behavior detection system.

Key indicators (note: measured data)

Over the 150 training epochs of the YOLO12 baseline model, mAP@0.5:0.95 rose steadily from an initial 51.87% to a final 99.48%, a gain of 47.61 percentage points. Training showed three distinct stages: a rapid ascent stage (epochs 1-20) that jumped from 51.87% to 97.07%, a stable convergence stage (epochs 20-100) that rose from 97.07% to 99.44%, and a fine-tuning stage (epochs 100-150) that reached 99.48% and stabilized.

Figure 4 mAP50-95 curve of the YOLO12 training process

The curve shows the model's learning ability and convergence behavior on the exam abnormal behavior detection task and supports the effectiveness of the training strategy. The final 99.48% mAP@0.5:0.95 and 99.50% mAP@0.5 show that the model performs very well even under the stricter evaluation criterion.
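The stage boundaries quoted above can be checked with a few lines of arithmetic. The sketch below uses only the four mAP@0.5:0.95 values given in the text and computes the gain of each stage in percentage points; the names are illustrative.

```python
# mAP@0.5:0.95 checkpoints (percent) quoted in the text, keyed by epoch.
CHECKPOINTS = [(1, 51.87), (20, 97.07), (100, 99.44), (150, 99.48)]
STAGES = ["rapid ascent", "stable convergence", "fine-tuning"]


def stage_gains(checkpoints: list[tuple[int, float]]) -> list[float]:
    """Gain in percentage points between consecutive checkpoints."""
    return [round(b[1] - a[1], 2) for a, b in zip(checkpoints, checkpoints[1:])]


gains = stage_gains(CHECKPOINTS)
total_gain = round(sum(gains), 2)

for name, gain in zip(STAGES, gains):
    print(f"{name}: +{gain:.2f} points")
print(f"total: +{total_gain:.2f} points")
```

Almost all of the improvement falls in the first 20 epochs; the last 50 epochs add only a few hundredths of a point.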

Summary of performance benefits

The YOLO12 baseline model shows excellent overall performance on the exam abnormal behavior detection task. With a lightweight design of 2.6M parameters and 6.7 GFLOPs, it reaches 99.50% mAP@0.5 and 99.48% mAP@0.5:0.95, with precision of 99.86% and recall of 99.69%, so both the false identification rate and the missed detection rate are within 0.5%. Over 150 full training epochs, the model converged stably on the training and validation sets, and mAP@0.5:0.95 rose from an initial 51.87% to 99.48%, a gain of 47.61 percentage points, supporting the model's learning ability and generalization performance. Besides its high accuracy, the model supports deployment on both CPU and GPU and suits real-time video stream processing and edge devices, providing an efficient, reliable, and easy-to-deploy technical solution for the exam abnormal behavior detection system.
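The "within 0.5%" statement follows from the precision and recall figures if the false identification rate is read as 100% minus precision and the missed detection rate as 100% minus recall. That reading is an assumption about the definitions used; the sketch below just carries out the subtraction.

```python
PRECISION = 99.86  # percent, quoted in the text
RECALL = 99.69     # percent, quoted in the text


def false_detection_rate(precision_pct: float) -> float:
    """Share of reported detections that are wrong: 100 - precision."""
    return round(100.0 - precision_pct, 2)


def miss_rate(recall_pct: float) -> float:
    """Share of true instances that go undetected: 100 - recall."""
    return round(100.0 - recall_pct, 2)


fdr = false_detection_rate(PRECISION)
mr = miss_rate(RECALL)
print(f"false detection rate: {fdr:.2f}%  (within 0.5%: {fdr <= 0.5})")
print(f"miss rate:            {mr:.2f}%  (within 0.5%: {mr <= 0.5})")
```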

System functions

The system provides four core functions: video detection, real-time detection, data analysis, and parameter configuration. Together they support intelligent identification, real-time monitoring, statistics, and visual analysis of abnormal exam behavior.

Feature overview

Based on the YOLO12 deep learning model, the system performs intelligent identification and analysis of abnormal exam behavior. It provides a modern graphical user interface (GUI) with two working modes, video recognition and real-time camera recognition, and integrates statistical analysis, recognition record management, and result visualization, offering efficient and convenient technical support for examination room monitoring, abnormal behavior detection, and exam management.

Video detection capabilities

Video recognition mode performs frame-by-frame recognition on recorded video files (MP4, AVI, and MOV formats). After the user clicks the “Video Detection” button and selects a video file, the system reads the video stream, runs target recognition on each frame, and displays the annotated frame, the current recognition frame rate (FPS), and cumulative statistics in the interface. Recognition uses DetectWorker multi-threaded asynchronous processing so that the interface does not lag, and the annotated video can be saved automatically. The system also records the types and distribution of abnormal behaviors in the video and automatically captures and saves a picture whenever abnormal behavior is detected.
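The asynchronous pattern described above can be sketched with the standard library alone. This is not the project's DetectWorker, whose implementation is not shown in the text and which, in a PySide6 application, would more likely be a Qt thread that reports results through signals. Here `DetectWorkerSketch` and `fake_detect` are hypothetical names, and integers stand in for video frames.

```python
import queue
import threading


def fake_detect(frame: int) -> dict:
    """Hypothetical stand-in for model inference on one frame."""
    return {"frame": frame, "label": "raising hand" if frame % 2 else None}


class DetectWorkerSketch(threading.Thread):
    """Background thread that pulls frames from a queue and pushes results."""

    def __init__(self, frames: queue.Queue, results: queue.Queue) -> None:
        super().__init__(daemon=True)
        self.frames = frames
        self.results = results

    def run(self) -> None:
        while True:
            frame = self.frames.get()
            if frame is None:  # sentinel: no more frames
                break
            self.results.put(fake_detect(frame))


frames: queue.Queue = queue.Queue()
results: queue.Queue = queue.Queue()
worker = DetectWorkerSketch(frames, results)
worker.start()

for index in range(4):  # the UI thread only enqueues frames and stays free
    frames.put(index)
frames.put(None)
worker.join()

detections = [results.get() for _ in range(4)]
print(detections)
```

Because inference runs off the main thread, the interface can keep repainting and handling clicks while frames are processed.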

Real-time detection capabilities

Real-time detection mode connects to a local camera or webcam to identify abnormal exam behavior live. When the system starts, it automatically scans for available camera devices (indices 0-9). After the user clicks the “real-time detection” button and selects a camera, the system runs recognition on the live video stream (GPU acceleration is supported for smooth processing) and displays recognition results and confidence levels in real time. When abnormal behavior is detected, it automatically triggers an alarm (status indicator, automatic capture and saving). Recognition statistics and the distribution of abnormal behavior types are updated in real time, and all detection results are saved to the database automatically.
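One plausible way to implement the startup scan over indices 0-9 is to try opening each index and keep the ones that succeed. The sketch below is an assumption about how such a scan could look, not the project's code. The probe is passed in as a function so the logic can be exercised without hardware; `opencv_probe` shows what a real probe might do with OpenCV's `VideoCapture`.

```python
from typing import Callable


def opencv_probe(index: int) -> bool:
    """Return True if OpenCV can open the camera at this index."""
    import cv2  # imported lazily so the sketch loads without OpenCV installed

    capture = cv2.VideoCapture(index)
    try:
        return capture.isOpened()
    finally:
        capture.release()


def scan_cameras(probe: Callable[[int], bool], max_index: int = 9) -> list[int]:
    """Return the indices in 0..max_index for which the probe succeeds."""
    return [index for index in range(max_index + 1) if probe(index)]


# Demonstration with a fake probe that pretends cameras 0 and 2 exist.
available = scan_cameras(lambda index: index in {0, 2})
print(available)
```

On a machine with OpenCV and cameras attached, `scan_cameras(opencv_probe)` would run the same scan against real devices.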

Data statistics and analysis

The data analysis module provides visualization and statistical analysis of recognition data. Clicking the “Data Analysis” button opens a separate analysis window with three tabs: data overview, abnormal behavior analysis, and detailed statistics. The window shows key indicators such as the total number of detections, types of abnormal behavior, detection frequency, and average confidence, and presents the data distribution through bar charts, pie charts, and statistical cards. Users can query historical recognition records (stored in the SQLite database), view the distribution of detection sources (video/camera), view abnormal behavior type statistics (Top 12), and clear the current user's records, giving persistent storage and comprehensive analysis of recognition data.
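The indicators listed above map naturally onto SQL aggregate queries. The sketch below is illustrative only: the table name `detections` and its three columns are assumptions, since the text does not give the real schema, and the rows are made-up sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical minimal table; the project's actual schema is not given.
conn.execute("CREATE TABLE detections (behavior TEXT, confidence REAL, source TEXT)")
conn.executemany(
    "INSERT INTO detections VALUES (?, ?, ?)",
    [
        ("raising hand", 0.90, "video"),
        ("raising hand", 0.80, "camera"),
        ("standing", 0.70, "video"),
    ],
)

# Overview card: total detections and average confidence.
total, avg_conf = conn.execute(
    "SELECT COUNT(*), AVG(confidence) FROM detections"
).fetchone()

# Distribution chart: detections per behavior, most frequent first, top 12.
per_behavior = conn.execute(
    "SELECT behavior, COUNT(*) AS n FROM detections "
    "GROUP BY behavior ORDER BY n DESC, behavior LIMIT 12"
).fetchall()

# Source chart: video versus camera.
per_source = dict(
    conn.execute("SELECT source, COUNT(*) FROM detections GROUP BY source")
)
print(total, round(avg_conf, 2), per_behavior, per_source)
```

With 12 behavior classes in total, `LIMIT 12` returns every class that has at least one detection.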

Records management capabilities

The record management module handles storage and querying of recognition results. For every recognition, the system automatically saves the time, image, abnormal behavior type, confidence level, bounding box coordinates, detection source, and other details to the SQLite database. Through the data analysis module, users can query historical statistics, filter by user, and view the distribution of abnormal behavior and detection source statistics. Recognized images and captured images are automatically saved to the save_data directory for traceability, and detection videos are saved with their annotations, covering the whole process from recognition to data management.
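A record with the fields listed above could be stored roughly as follows. This is a sketch under assumptions: the `records` table, its column names, the `DetectionRecord` class, and the example file name are all hypothetical, chosen only to mirror the fields the text mentions.

```python
import sqlite3
from dataclasses import astuple, dataclass
from datetime import datetime


@dataclass
class DetectionRecord:
    username: str
    detected_at: str   # ISO 8601 timestamp
    image_path: str    # e.g. a capture under save_data/
    behavior: str
    confidence: float
    x1: float          # bounding box corners in pixels
    y1: float
    x2: float
    y2: float
    source: str        # "video" or "camera"


conn = sqlite3.connect(":memory:")  # a real deployment would use a file path
conn.execute(
    """CREATE TABLE records (
           id INTEGER PRIMARY KEY AUTOINCREMENT,
           username TEXT, detected_at TEXT, image_path TEXT, behavior TEXT,
           confidence REAL, x1 REAL, y1 REAL, x2 REAL, y2 REAL, source TEXT)"""
)


def save_record(record: DetectionRecord) -> None:
    """Insert one detection; the connection context manager commits it."""
    with conn:
        conn.execute(
            "INSERT INTO records (username, detected_at, image_path, behavior, "
            "confidence, x1, y1, x2, y2, source) VALUES (?,?,?,?,?,?,?,?,?,?)",
            astuple(record),
        )


def records_for(username: str) -> list[tuple]:
    """Return (behavior, confidence, source) rows for one user, oldest first."""
    return conn.execute(
        "SELECT behavior, confidence, source FROM records "
        "WHERE username = ? ORDER BY id",
        (username,),
    ).fetchall()


save_record(DetectionRecord("alice", datetime(2025, 1, 1, 9, 0).isoformat(),
                            "save_data/frame_0001.jpg", "standing", 0.93,
                            10, 20, 110, 220, "video"))
print(records_for("alice"))
```

Storing the image path rather than the image bytes keeps the database small while still allowing each record to be traced back to its capture.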

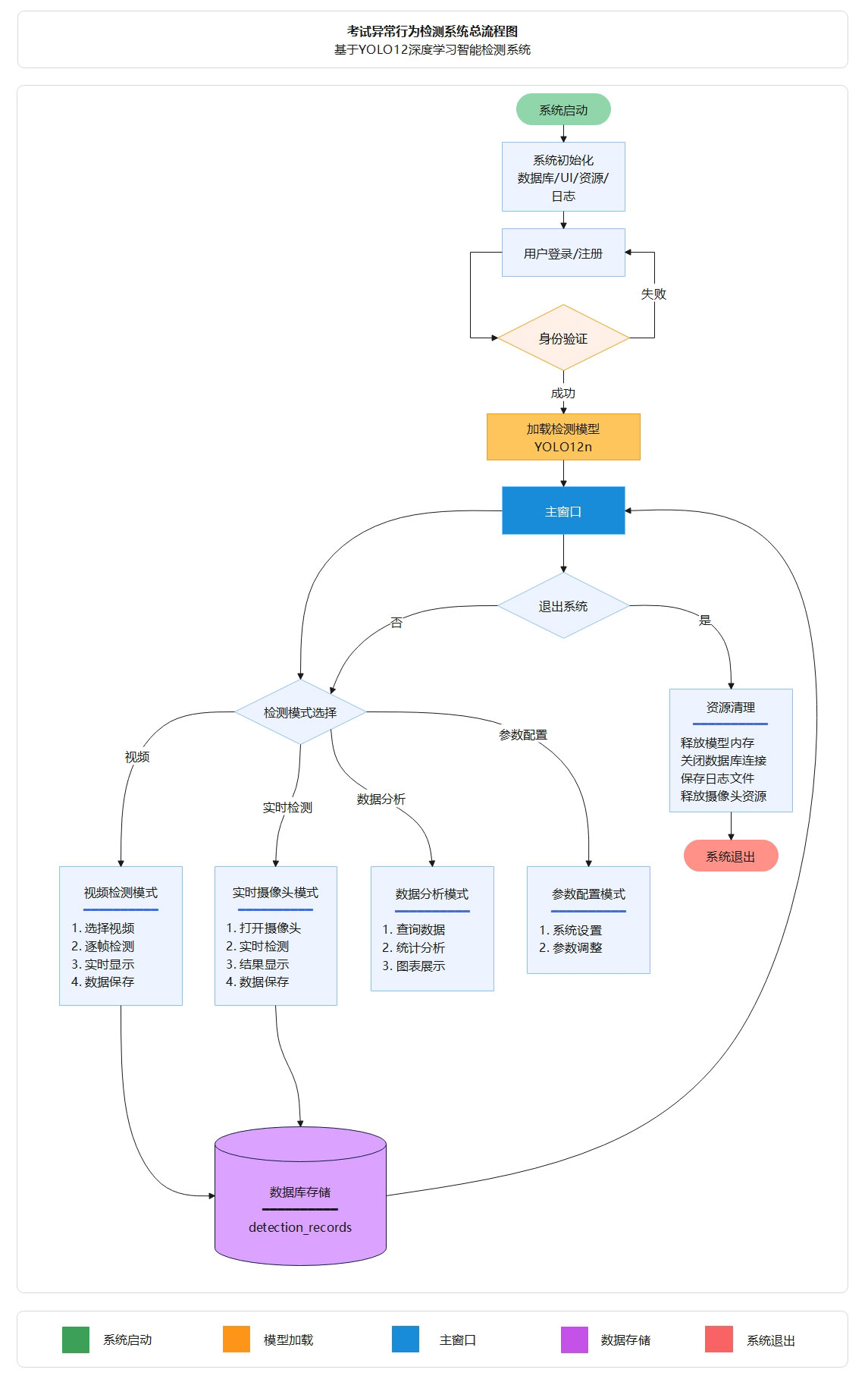

System architecture

The system is developed in Python 3.12. Object detection is built on the Ultralytics YOLO12 deep learning framework, the graphical user interface on PySide6 (Qt for Python), and image and video processing on OpenCV. DetectWorker multi-threaded asynchronous processing keeps the interface responsive, and an SQLite database provides persistent data storage and querying. The architecture is clear and modular, which makes the system easy to extend and maintain.

Figure 5 Overall flow chart of the exam cheating and abnormal behavior detection and analysis system

System advantages:

Based on the YOLO12 deep learning model, the system performs intelligent identification and analysis of abnormal exam behavior. It uses the lightweight YOLO12n detection network, which achieves mAP@0.5 = 99.50% and mAP@0.5:0.95 = 99.48% on the validation set, with precision of 99.86% and recall of 99.69%. The model has only 2.6M parameters, 6.7 GFLOPs of compute, and a 5.5 MB model file, which suits edge device deployment. The system supports real-time video stream processing, has built-in FPS monitoring and inference time statistics, and supports GPU acceleration for smooth response. It provides two recognition modes, video file and real-time camera, together with statistical analysis and visualization functions, to meet the needs of examination room monitoring.

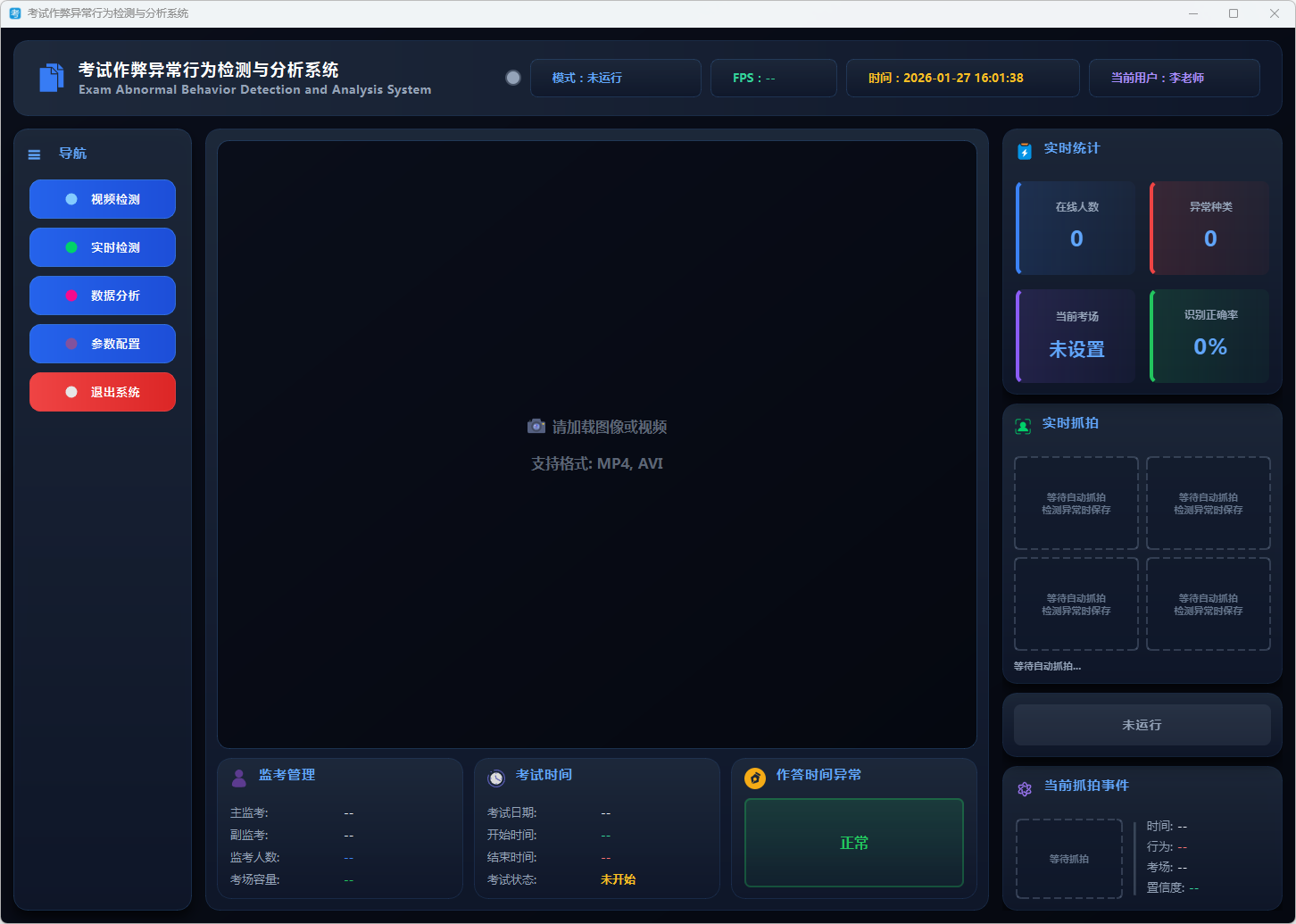

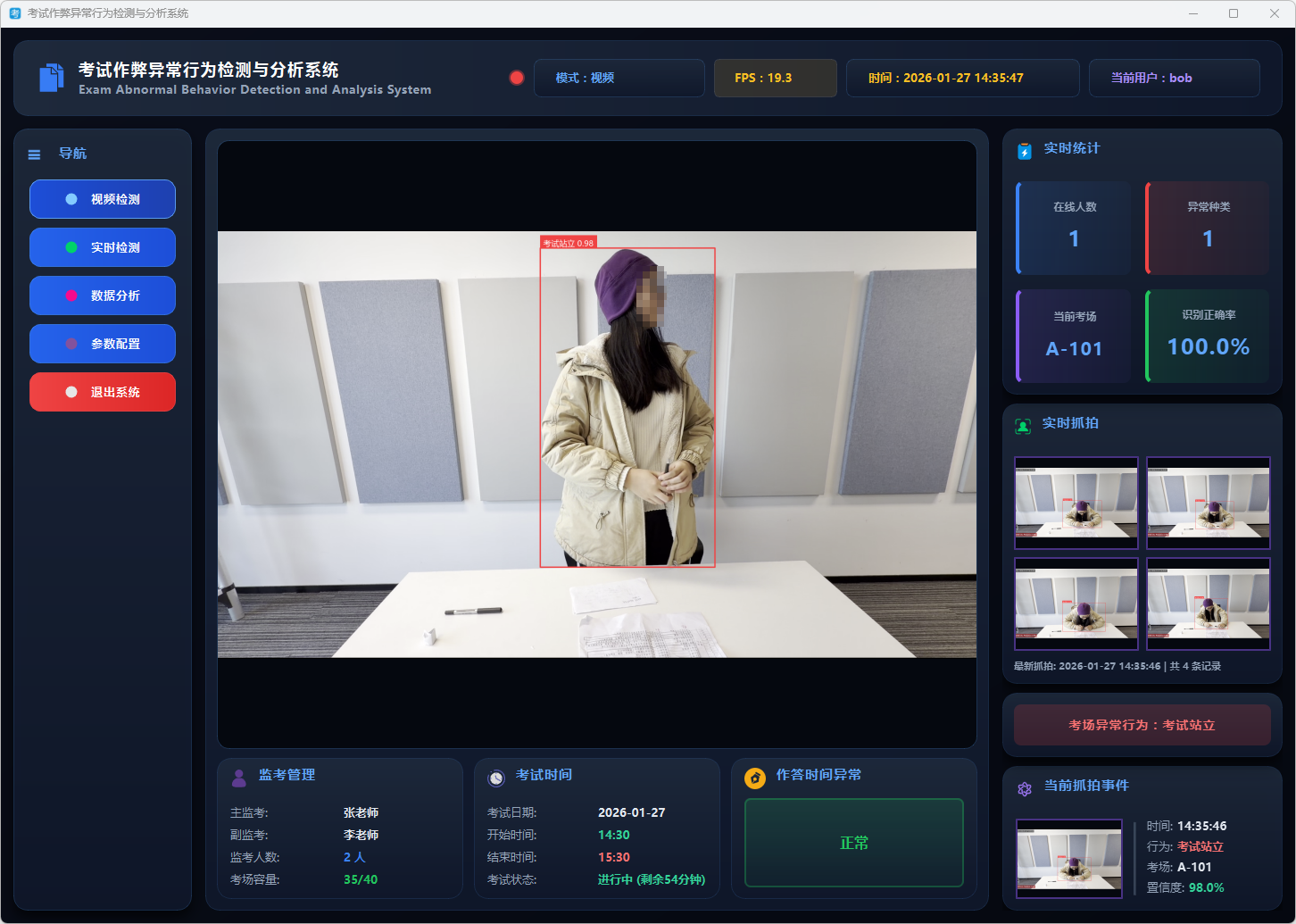

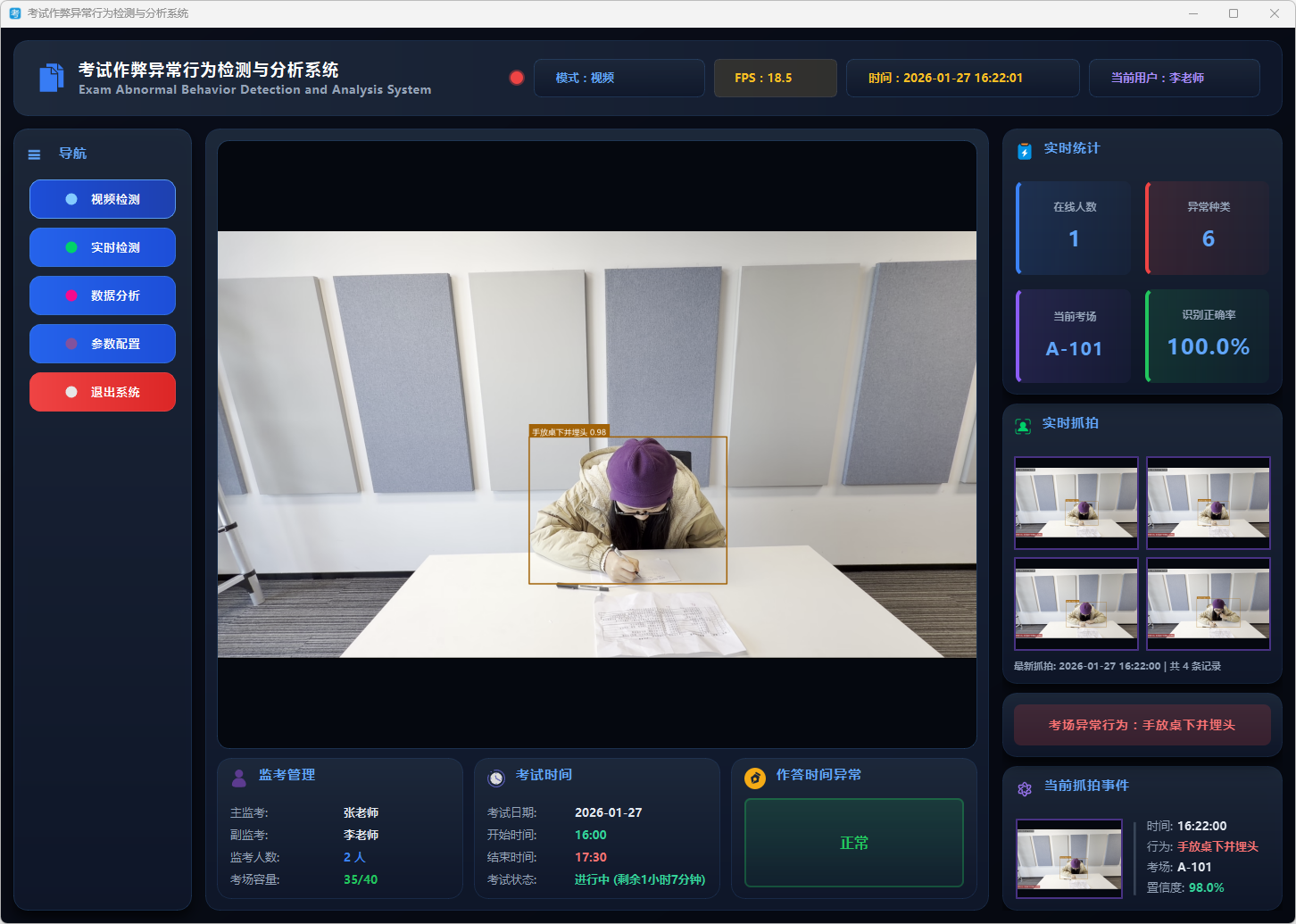

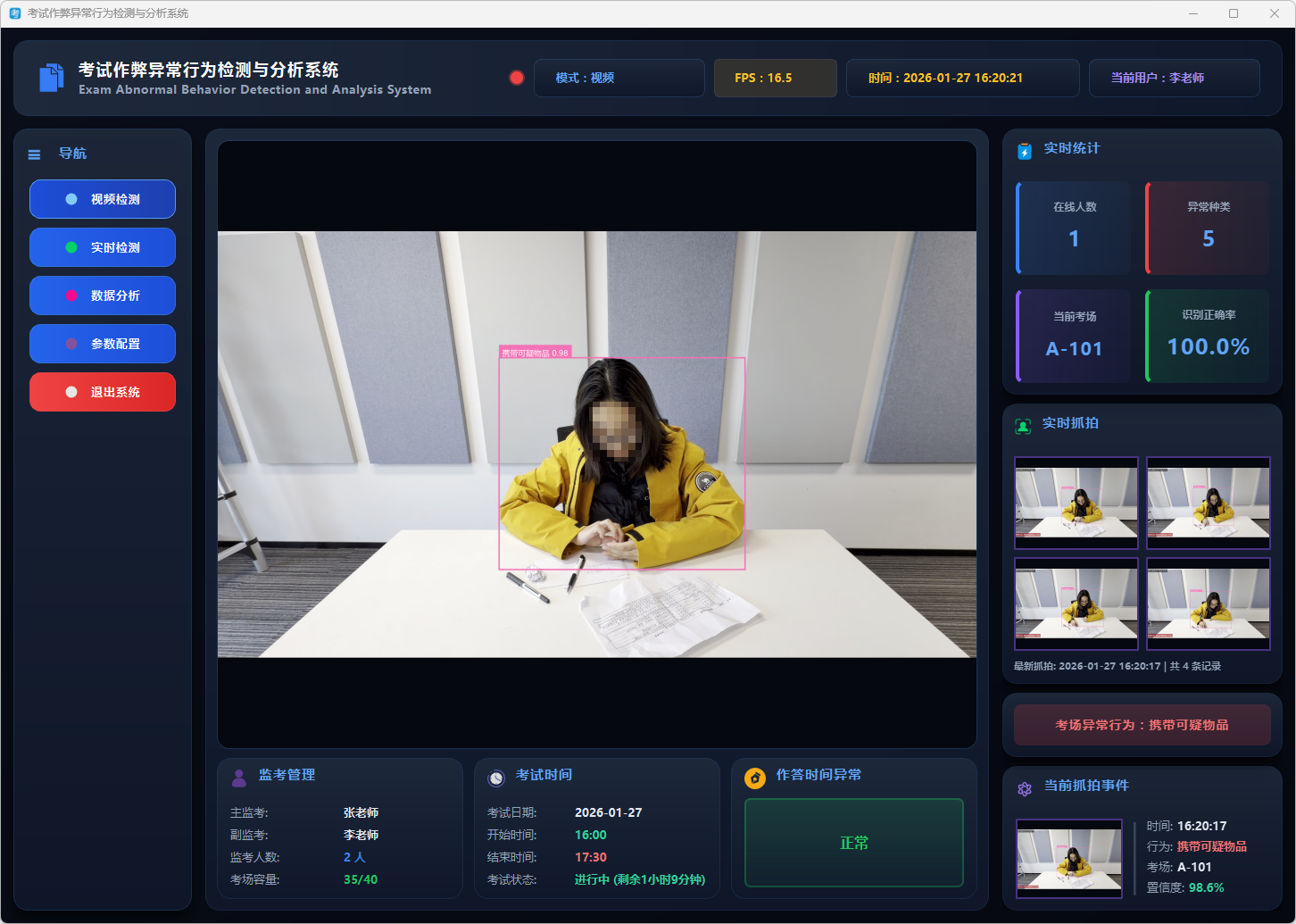

System demonstration

The system interface is divided into three areas: function buttons and parameter settings on the left, central recognition screen display, and statistical information and record management on the right, providing complete functions such as video/real-time recognition, data analysis, result display, and record query.

Detection effect display

Login Interface:

Figure 6 Login main interface

User login interface, the entry point to the system

Figure 7 Registration main interface

User registration interface, where new users create an account

System Operation Module:

Figure 8 The main interface of the system

Figure 9 Video detection: Answering in advance

Figure 10 Video detection: Passing suspicious items

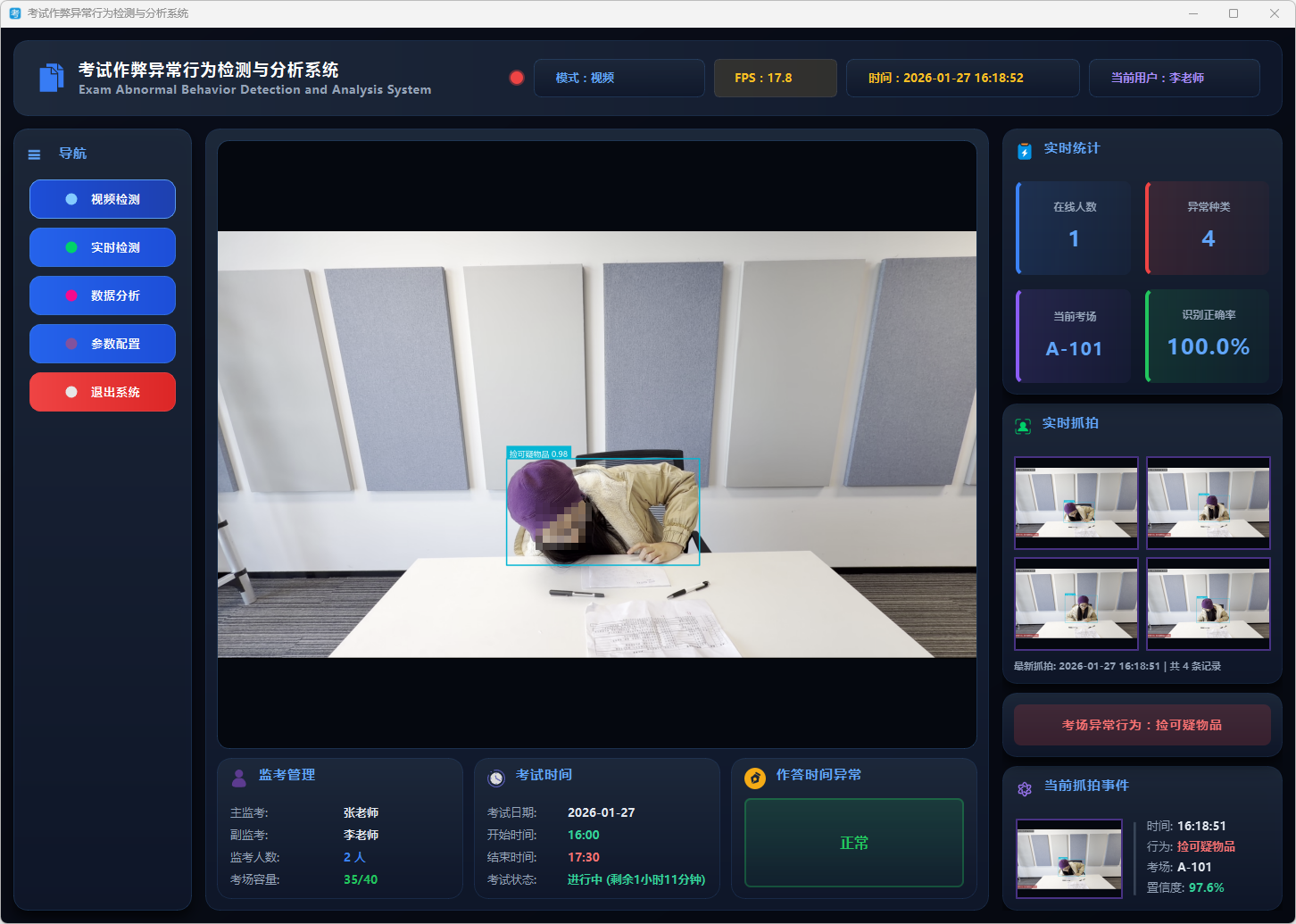

Figure 11 Video detection: Picking up suspicious items

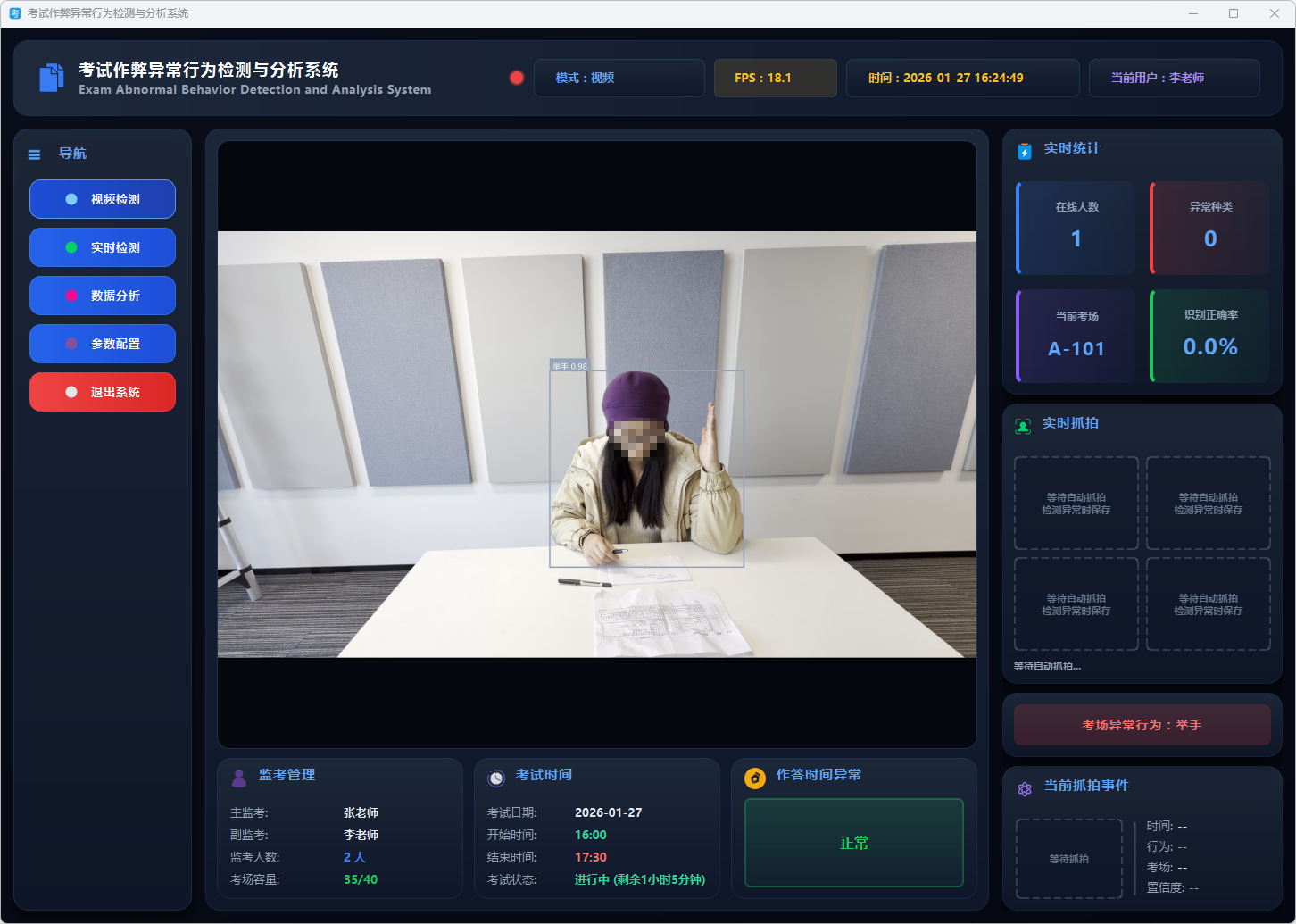

Figure 12 Video detection: Raising hand (the invigilator must judge on the spot whether the behavior is compliant)

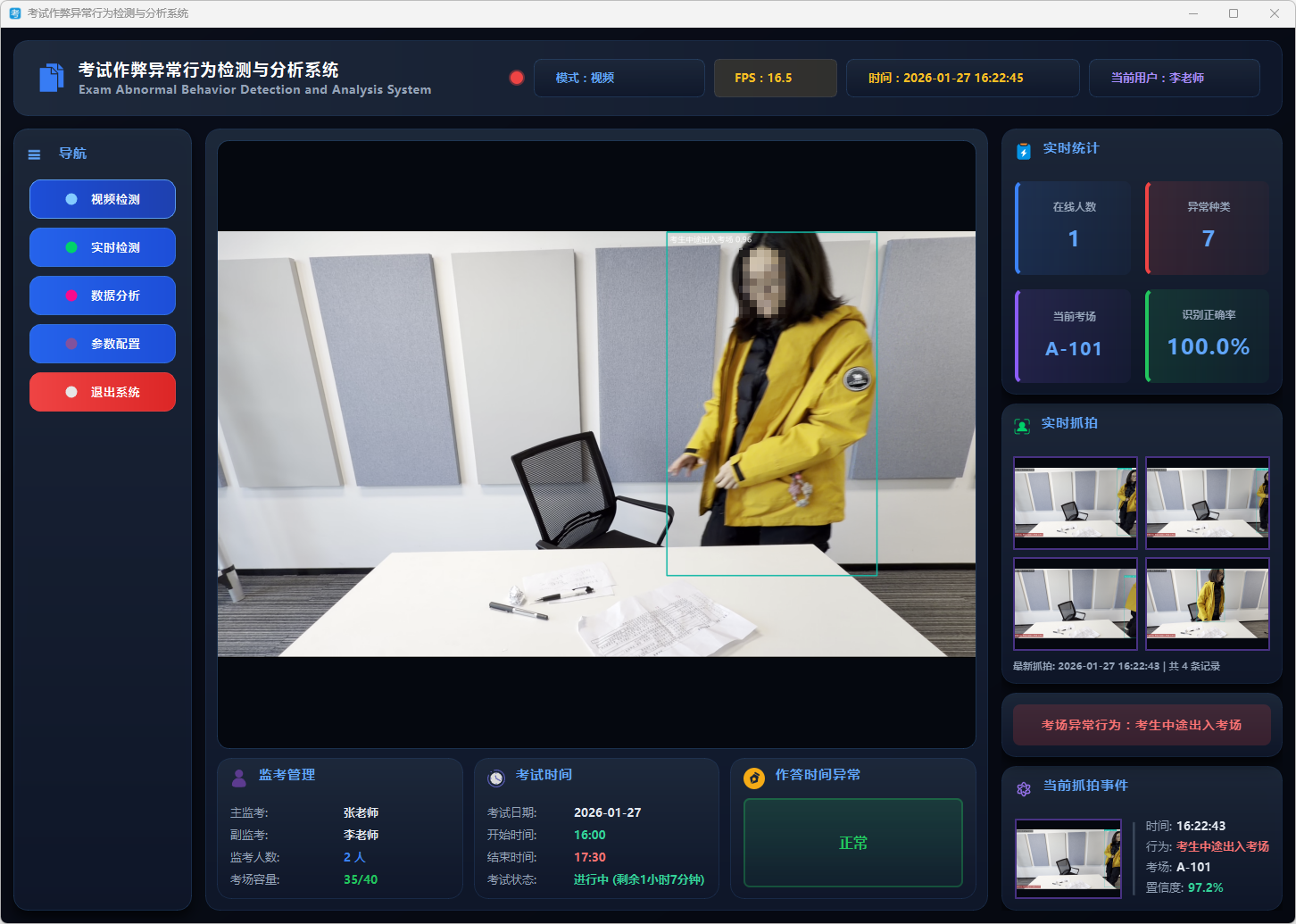

Figure 13 Video detection: Candidates enter and exit the examination room midway

Figure 14 Video detection: Exam standing

Figure 15 Video detection: Hands under the table with head down

Figure 16 Video detection: Head tilted backward

Figure 17 Video detection: destroy the test paper
